Quick Summary

Voice-first interaction and gesture control are not simply interface design innovations. They are a total redefinition of how users interact with an application. Imagine spending heavily on a highly functional application, only to find that users are leaving it, not because of performance, but because it no longer fits the way they expect to interact with it. Users don’t want complex icons or submenus nested within menus. What they look for in an app is ease of access. One effective way to achieve this is through voice and gesture interfaces integrated into UI/UX design services.

Introduction: The Shift Towards Voice and Gesture in UI/UX

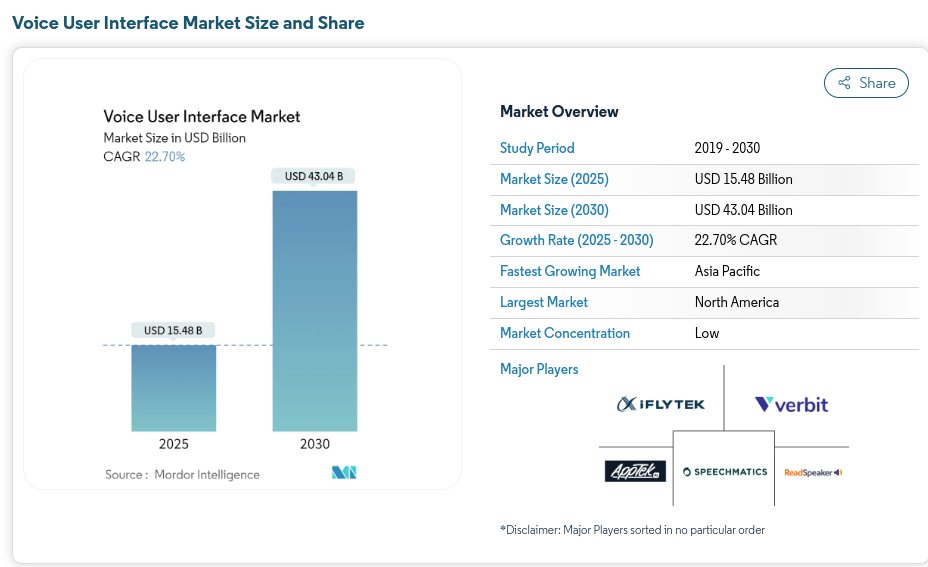

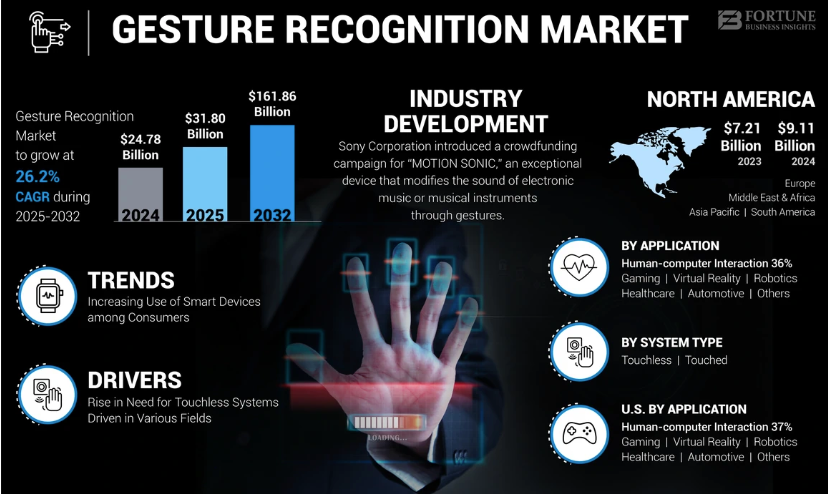

An estimated 8.4 billion digital voice assistants are in use worldwide, and around 71% of mobile users choose voice interfaces for fast hands-free interactions. According to recent market estimates, the voice user interface market may reach USD 43.04 billion by 2030, and the gesture recognition market may reach USD 161.86 billion by 2032.

This surge in the market reflects the demand from sectors and customers for better human-computer interaction methods. That demand has created an urgent need for voice and gesture interface design to become part of all modern UI/UX design services.

But as a decision-maker, which voice and gesture interface trends should you integrate with your current UI/UX roadmap? Let’s find out!

Evolution of Human–Computer Interaction: From Touch to Touchless

History shows that every innovation in human-computer interaction has been directed at removing intermediary objects. Touchless technologies emerged as a result of the gradual reduction in the number of devices needed for control, ultimately leading to their elimination.

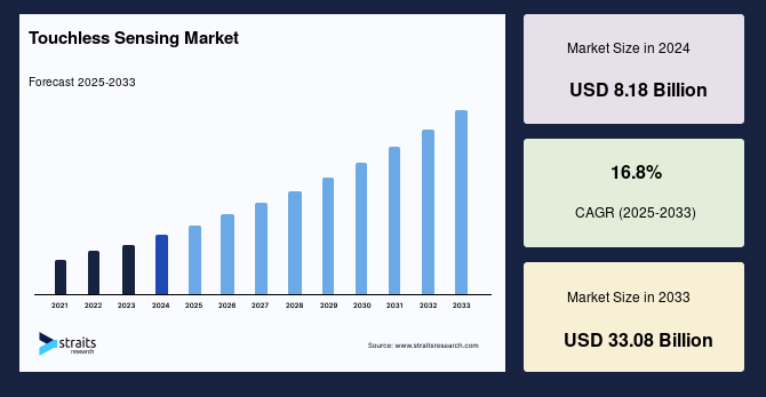

As the world experienced the pandemic, individuals began to care more about personal hygiene. People are more cautious about the objects and surfaces they touch and use. Thus, the emergence of touchless technologies is natural. Gestures and voice commands had already become a common feature of communication with devices, primarily with the appearance of touchscreens. According to market estimates, the touchless sensing market will reach USD 33.08 billion by 2033.

Why Businesses are Integrating Voice and Gesture Interfaces with UI UX Design Services

Today, many businesses are investing in UI and UX design services for voice and gesture interfaces. The reason? These interfaces offer more accessibility, convenience, and ease, letting customers and users interact efficiently at home, at work, or on the go.

Allows multitasking: You can now do multiple tasks at the same time. For example, you can give a voice command to a smart device to play a song while cooking or doing some other work in parallel. Or simply wave a hand in front of the screen to change the song.

Ease of use for people with disabilities: Voice and gesture controls are especially helpful for people with disabilities. People with physical disabilities can simply ask the smart home system to switch on the lights instead of making the effort to reach the switch.

The Role of AI and Machine Learning in Enhancing Interaction Accuracy

Virtual Controllers with Voice Assistance Bot shows a new way for people to interact with computers by combining gesture recognition, virtual control, and voice commands. Using machine learning and AI-powered voice recognition, this system lets users control digital devices without touching them, making it easy, efficient, and accessible to use. It works on a regular computer with just a webcam and microphone, so no special hardware is needed. This is how a computer uses AI and ML to detect voice and gestures:

Using natural language processing (NLP), the AI understands and detects the voice content and context.

Computer vision (an ML technique) quickly records and detects hand or face gestures through the web camera, and interprets their meaning according to the trained models.

It responds according to the actions as trained and, using predictive learning algorithms, it learns new patterns to provide a more intuitive experience to the users.
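
The last step above, turning a recognised voice intent or gesture into a response, can be sketched roughly as follows. This is a minimal illustration rather than the system's actual implementation: the `Recognition` type, the `COMMANDS` table, the label names, and the 0.8 confidence threshold are all assumptions made for the example, and the speech and vision models themselves are left out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Recognition:
    """One output from a (hypothetical) speech or vision model."""
    modality: str      # "voice" or "gesture"
    label: str         # an intent name or a gesture name
    confidence: float  # model score in the range [0, 1]


# Hypothetical mapping from recognised labels to application commands.
# Different modalities can map to the same command.
COMMANDS = {
    ("voice", "play_music"): "PLAY",
    ("voice", "next_track"): "NEXT",
    ("gesture", "swipe_left"): "NEXT",
    ("gesture", "open_palm"): "PAUSE",
}


def to_command(event: Recognition, min_confidence: float = 0.8) -> Optional[str]:
    """Return the command for a recognised event, or None if it is ignored."""
    # Discard low-confidence predictions instead of acting on a guess.
    if event.confidence < min_confidence:
        return None
    # Unknown (modality, label) pairs also resolve to None.
    return COMMANDS.get((event.modality, event.label))
```

Because both modalities resolve to the same command names, the rest of the application does not need to know whether the user spoke or waved.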

Multimodal Interfaces: Voice, Gesture, and Visual Design

Multimodal interfaces let users interact with technology through different types of input, like voice, gestures, touch, and visuals. The goal is to make the user experience feel more natural and intuitive, similar to how people communicate with each other. Key components of multimodal interfaces include:

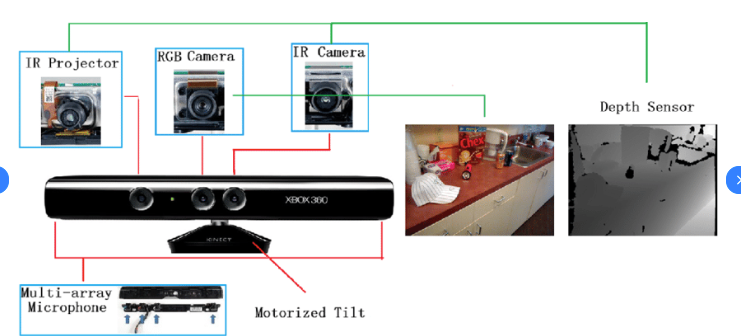

Gesture recognition involves interpreting movement, such as hand movement or body position, and using these gestures to control devices or applications. For example, the Kinect sensor for Xbox consoles uses gesture recognition to give users a more immersive gaming experience.

Speech Recognition Technology enables users to speak to a system, which will recognize and process the user’s speech. Natural Language Processing takes speech a step further by understanding context and intent related to spoken words to engage with users in a more meaningful and precise way.

By recognising facial expressions, systems could estimate a user’s emotional state and respond appropriately. For example, some customer service bots can alter their responses depending on how frustrated or satisfied the user is.

Haptic feedback gives users a sense of touch by creating physical sensations like vibrations. You’ll often find haptic feedback in smartphones and gaming controllers, where it matches what’s happening on the screen.

Brain-Computer Interfaces (BCIs) allow people to control devices just by thinking. This technology connects the brain directly to external gadgets.

Gesture Interface Design: Principles, Technology, and Industry Applications

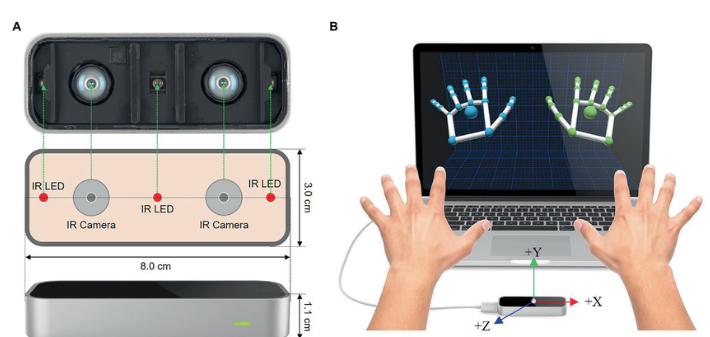

Gesture control is a technology that enables users to engage with virtual objects without physical contact. Systems with cameras and infrared sensors detect human gestures and movements of fingers, hands, the head, or the full body, then quantify them into systematic commands using mathematical algorithms.

Touchless Tech is built on the foundations of motion tracking, depth sensing, and AI-enabled gesture recognition technologies. Some core technologies are driving this space, including:

Leap Motion: Uses infrared sensors to facilitate precise tracking of hand movements and gestures.

Microsoft Kinect: Initially developed as a gaming accessory for Xbox, and subsequently adopted for full-body motion tracking applications in industries such as gaming and healthcare.

Intel RealSense: Uses 3D depth mapping to detect position, gestures, and facial expressions.

Apple’s TrueDepth Camera: Detects and tracks movement of a user’s face by mapping and analysing over 30,000 invisible dots. The system is used in the Face ID application.

Industry Applications

Gesture-based user interface applications have been adopted in the automotive, healthcare, consumer electronics, gaming, aerospace, defence, and related end-user sectors.

Currently, gesture-controlled games are also available in the App Store. One gesture-controlled game is Don’t Touch, which uses gestures that the mobile device’s camera detects.

GestureTek was one of the earliest industry leaders in developing video-gesture-controlled virtual reality solutions for museums, science centres, amusement parks, and similar venues.

The best gesture-controlled app available on iOS devices is Touchless Browser, which allows users to interact with the app remotely using hand gestures that the camera detects.

Voice Interface Design: Best Practices, Use Cases, and Accessibility

Voice interfaces use speech recognition technology and advances in natural language processing (NLP). A microphone picks up sound waves, and a speech model processes them. It filters out noise and breaks the sound into small pieces, each about 25 milliseconds long. These pieces are then matched with words, allowing the system to understand what was said and perform the requested task.
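
The framing step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not a description of any particular speech engine: the 25 ms frame length comes from the text, while the 10 ms hop between frames (which makes neighbouring frames overlap) and the function name `split_into_frames` are assumptions chosen for the example.

```python
def split_into_frames(samples, sample_rate, frame_ms=25, hop_ms=10):
    """Split a sequence of audio samples into short, fixed-length frames.

    frame_ms is the frame length in milliseconds (25 ms, as in the text).
    hop_ms is the step between frame starts; 10 ms is an assumed value.
    Trailing samples that do not fill a whole frame are dropped.
    """
    frame_len = int(sample_rate * frame_ms / 1000)
    hop_len = int(sample_rate * hop_ms / 1000)
    if frame_len <= 0 or hop_len <= 0:
        raise ValueError("frame and hop must each cover at least one sample")

    frames = []
    start = 0
    while start + frame_len <= len(samples):
        frames.append(samples[start:start + frame_len])
        start += hop_len
    return frames


# One second of silence at 16 kHz, standing in for microphone input.
one_second = [0.0] * 16000
frames = split_into_frames(one_second, sample_rate=16000)
```

At 16 kHz, each 25 ms frame holds 400 samples. A real pipeline would then compute features for every frame before matching them to words; that part is outside this sketch.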

This technology is used in voice assistants, voice-controlled devices, and transcription services. It’s becoming more common in industries like IoT, automotive, and finance.

What makes a voice interface unique is that you can’t see it. It is typically used in areas like home devices, healthcare, and entertainment, where you can communicate tasks through voice commands and get results with minimal delay. For example, when running a smart home, it’s convenient to give voice commands to a voice assistant like Alexa or Siri, which connects to all your home devices. In a vehicle, voice interfaces allow you to adjust climate control, change the music volume, decline calls, and ask for directions without taking your hands off the wheel. According to a GlobalData report on in-car voice assistants, over 720,000 patents have been filed and granted in the automotive sector in the last 3 years.

Challenges and Solutions in Voice and Gesture UX Development

Like other technologies, there are some limitations with gesture and voice interfaces that need to be addressed in order to utilise them effectively.

1. False Activations

How does a recognition system differentiate intentional gestures from unintentional gestures? This is a difficult task that needs to be perfected. For example, if a driver gestured with their hand unintentionally while they were driving a moving vehicle, and it was interpreted by the smart gesture technology, then this could pose a serious safety concern.

To improve accuracy, developers need to refine the following:

Gesture tracking algorithms, to reduce false positives.

AI-based motion analytics to incorporate user-specific behaviour.

Environmental adaptability, ensuring gestures work in various lighting and background conditions.
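
One simple way to lessen false activations is to act on a gesture only after it has been seen with high confidence for several consecutive camera frames. The sketch below illustrates that idea; it is an assumed technique for illustration, not a method named in this article, and the class name `GestureDebouncer`, the 0.85 threshold, and the five-frame requirement are hypothetical values.

```python
class GestureDebouncer:
    """Confirm a gesture only after a run of consistent, confident frames."""

    def __init__(self, min_confidence=0.85, required_frames=5):
        self.min_confidence = min_confidence
        self.required_frames = required_frames
        self._last_label = None
        self._streak = 0

    def update(self, label, confidence):
        """Feed one per-frame prediction.

        Returns the label once, on the frame where the streak reaches
        required_frames; returns None on every other frame.
        """
        # A missing or low-confidence prediction breaks the streak.
        if label is None or confidence < self.min_confidence:
            self._last_label = None
            self._streak = 0
            return None

        if label == self._last_label:
            self._streak += 1
        else:
            # A different gesture starts a new streak.
            self._last_label = label
            self._streak = 1

        # Fire exactly once, so a held gesture does not retrigger.
        if self._streak == self.required_frames:
            return label
        return None
```

A brief, accidental hand movement rarely survives several frames in a row, so it is dropped, while a deliberate gesture held slightly longer is confirmed. The trade-off is a small delay before the system reacts.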

2. Cultural Differences

Understanding how gestures are interpreted differently based on culture, age, profession, etc, is quite a challenge.

Here is how you can simplify gesture complexity:

Use ‘universal’, intuitive gestures (e.g., wave = “dismiss”, rather than several hand signs).

Minimize the number of gestures to avoid overwhelming users (too many are hard to remember).

Integrate gesture suggestions; the system will suggest possible interactions based on context.

3. Data Security

If attackers capture or imitate a voice that the system recognises, they can use it for many forms of breaches. This matters because voice interfaces are often used when the normal way of accessing devices isn’t practical. Here’s how to reduce the risk of a data breach in voice recognition:

Create a robust end-to-end encryption platform.

Ensure that you integrate strong speech recognition approaches that also adhere to data security and privacy compliance regulations.

4. Unclear Functionality

A voice interface does not reveal its functionality until users test it themselves. With no visual instructions, users cannot know at first which tasks it can accept. Here’s how to solve this problem:

Give the users the freedom to input gestures that suit them and the way they act naturally.

Track which gestures the UI/UX is responding to. Try variations; this will help the system to learn and adapt to new gestures using ML.

Future Trends: Emotional AI, Screenless Experiences, and AR/VR Integration

Gaming platforms recognise the potential of gesture-based controls to deliver immersive screenless experiences. Moreover, with seamless VR and AR integrations, UI/UX interfaces will leverage whole-body motion capture and AI-optimised gestures to provide hyper-realistic experiences. Gesture recognition systems will become increasingly precise and adaptive as they continue to evolve with AI. Chatbots and virtual avatars will be able to recognise and respond to user emotions and facial expressions more efficiently, with responses that may even feel as natural as a human’s.

Conclusion: Partnering with the Best User Experience Design Agency

Technology has repeatedly revolutionised human-device interaction, and voice and gesture-based interfaces are the modern approach. Despite the accelerating rise of voice and gesture technologies, classic graphical interfaces remain essential. Today, we predominantly encounter a combination of voice, gesture, and graphical (touch) interfaces. This combination provides a wider range of capabilities and is adaptable to various user needs.

Bring your app to life with voice and gesture controls! At Rainstream Technologies, we design intuitive, visually engaging interfaces that make every interaction effortless. Talk to our experts today and elevate your user experience.

FAQs:

What is the role of voice and gesture interfaces when integrated with UI/UX?

The voice and gesture interfaces allow users to interact with the system using voice commands or simple hand or body gestures. This eliminates the need to touch or type on the interface, thus saving time.

Is it possible to use voice and gesture interfaces together?

Yes. With a multimodal interface, you can use voice and gestures and interact with visual elements at the same time.

Which are the most popular industries that use voice and gesture interfaces with their UI/UX?

Gaming, entertainment, healthcare, automotive, and retail are the most popular industries that integrate voice and gesture interfaces.